Shayan Awan

I build stuff

NeuroSpectrum's Journey

It is estimated that 1 in every 5 individuals has some form of dyslexia. Dyslexia is one of the leading learning disabilities, and it is commonly unrecognized or misdiagnosed. The International Dyslexia Association (IDA) suggests that dyslexia affects approximately 15-20% of the population. Research suggests that dyslexic individuals have a 50% chance of also having Irlen Syndrome. While the two are completely different conditions, it is quite common for Irlen Syndrome to be misdiagnosed as dyslexia, as they share similar symptoms.

Irlen Syndrome was identified relatively recently, in the 1980s. Some symptoms include:

Headaches/migraines in bright light (including fluorescent light and sunlight)

Eye pain/watering

Attention difficulties

Fatigue when reading

Poor depth perception

Visual distortions

Many individuals with Irlen Syndrome experience symptoms particularly while reading. Black text on a white background can be challenging because of the discomfort caused by white glare reflecting off the paper. For these individuals, the disorder is often first noticed during reading. The discomfort they experience can be misidentified as dyslexia, which keeps them from receiving proper treatment.

To tackle this problem, I created NeuroSpectrum: A Brainwave-Powered Vision Enhancement Device.

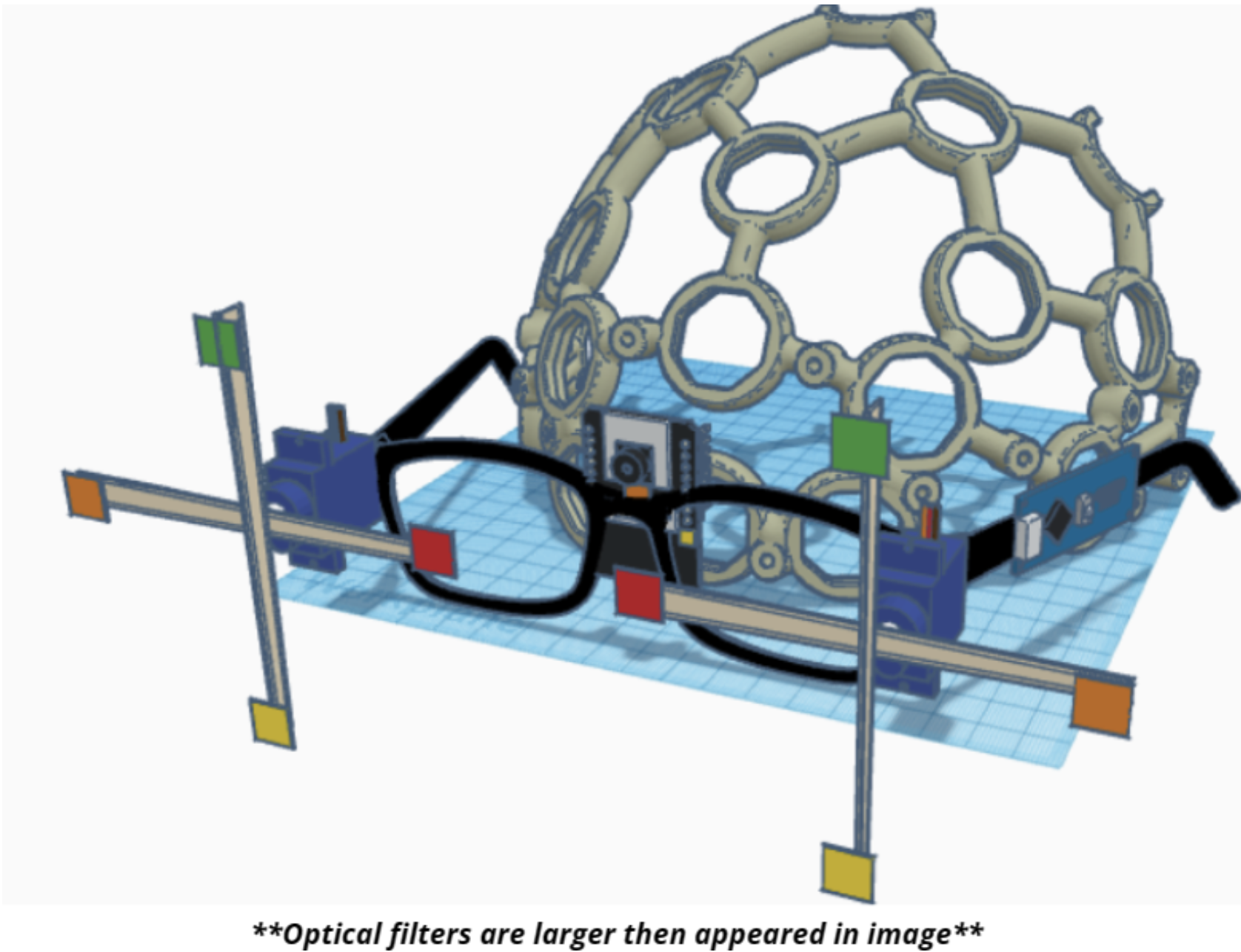

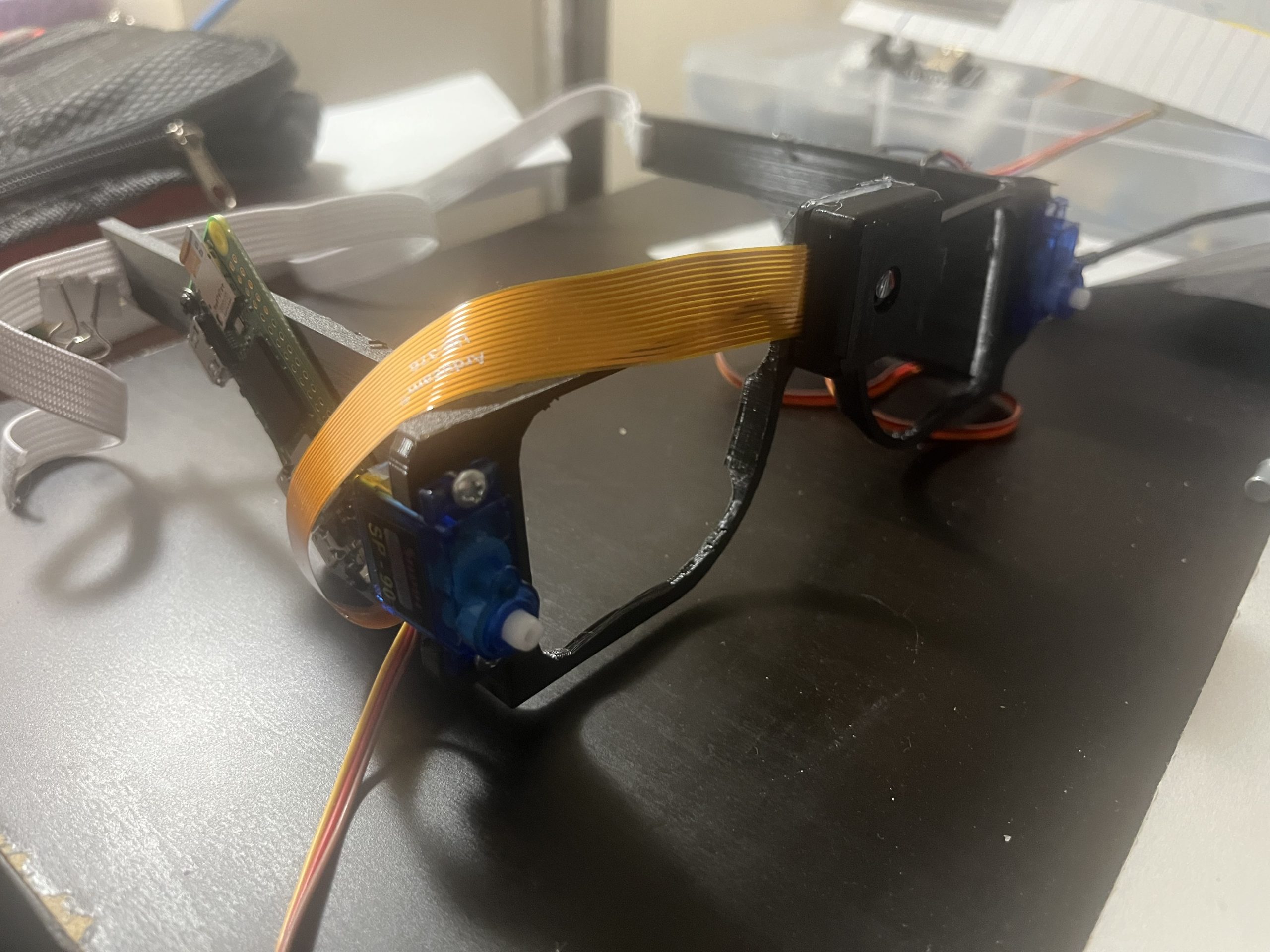

The device uses the Ultracortex Mark IV, an electroencephalogram (EEG) headset from OpenBCI, to obtain data from both the frontal lobe and the occipital lobe, where visual processing occurs. It also features optical filters attached to a servo motor, which are placed in front of the eyes.

The data from the headset is read in as serial data, which can be recorded and analyzed in order to swap to the filter that best assists the user in completing their task.

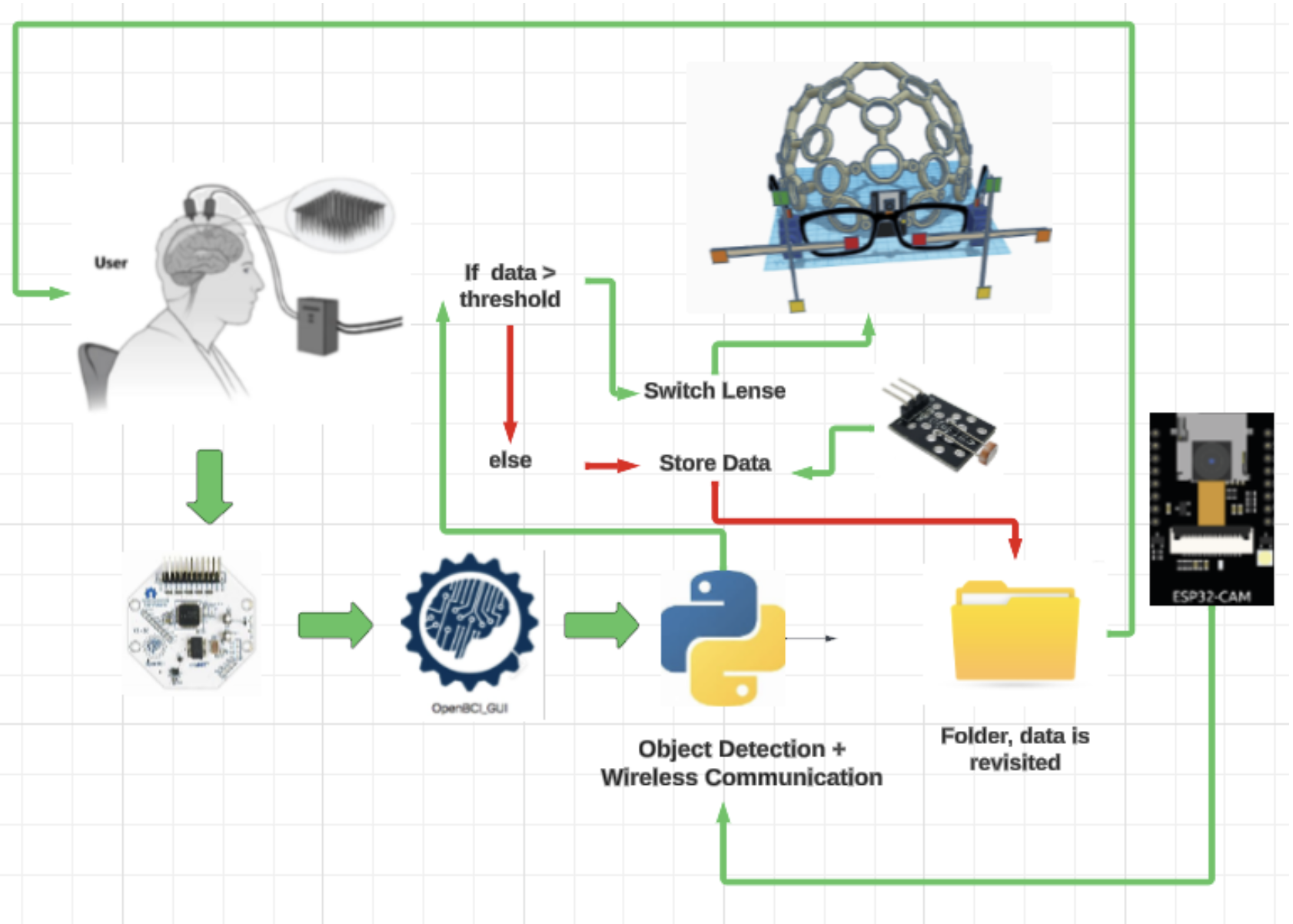

The above diagram explains the overall function of the device, with the following steps:

1. Data acquisition from the brain (EEG)

2. OpenBCI Cyton board converts signal and can be read using the OpenBCI GUI

3. Data is recorded

4. Photoresistor captures intensity of light

5. Data is recorded

6. The camera module uses object detection to identify the task (e.g. reading from a book, reading on a computer screen)

7. The device cycles through all the filters

8. The data is then compared to see which lens gives the least feedback

9. The data is then stored. If the user enters a similar environment and condition, the device swaps to the corresponding lens.

This way, the device is customized to match the user's specific needs.

**Updated progress**

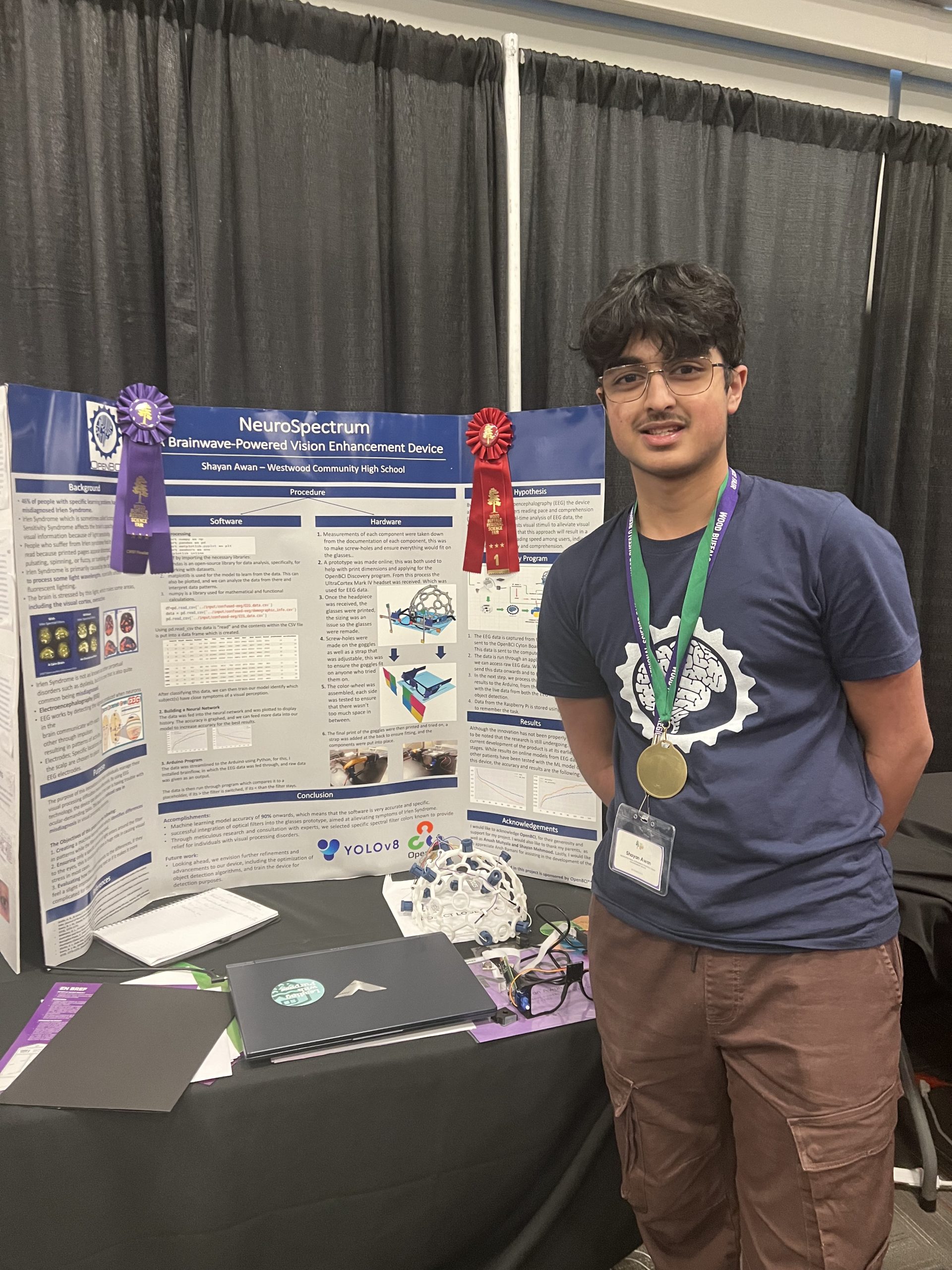

The prototype shown above was built before the school science fair, which took place on March 8. From there, the project moved on to the regional fair, which took place on the 15th and 16th. I received a gold medal and was selected to represent our region at the upcoming Canada Wide Science Fair, taking place from May 25 to June 1!

The main changes:

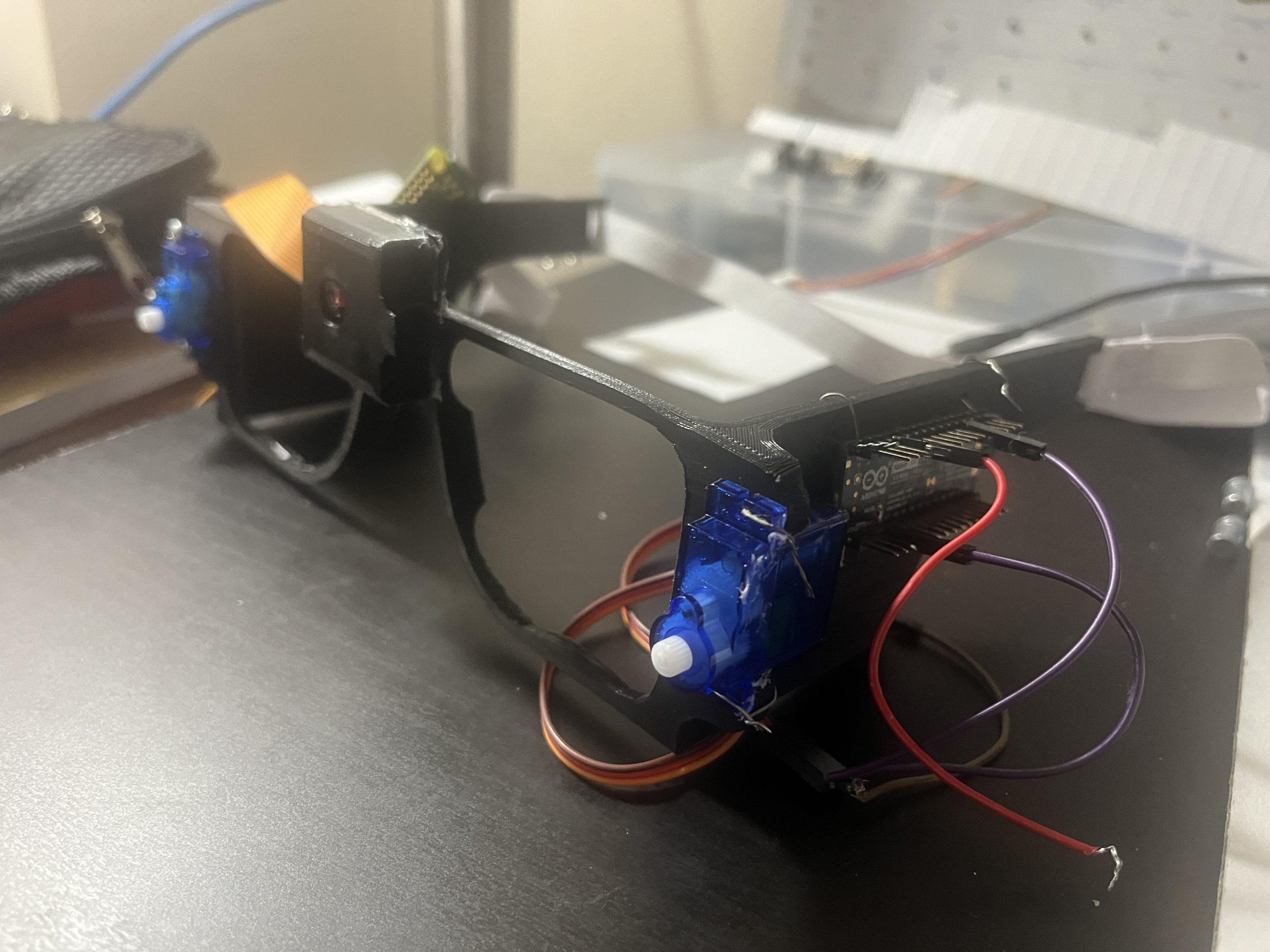

Program: The program was changed so that the computing took place on a laptop connected to the headset. The EEG data was saved in .csv format as 4 different files, each containing 7 seconds of data recorded with a filter placed in front of each eye. This data was then compared and stored based on the task identified by the object detection model.
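As a rough illustration of the comparison step, here is a minimal sketch that scores one recording per filter and picks the lowest. It rests on several assumptions: the post does not say which measure was used to compare recordings, so the mean per-channel variance below is only a placeholder, and the function names `score_recording` and `pick_filter` are hypothetical. It also assumes each recording has been reduced to purely numeric rows, one column per EEG channel, with any header lines already removed.

```python
import csv
import io
from statistics import pvariance


def score_recording(csv_text: str) -> float:
    """Placeholder score: mean per-channel variance of one recording.

    Assumes every non-empty row holds one numeric sample per channel.
    """
    rows = [
        [float(value) for value in row]
        for row in csv.reader(io.StringIO(csv_text))
        if row
    ]
    channels = list(zip(*rows))  # transpose rows into per-channel columns
    return sum(pvariance(channel) for channel in channels) / len(channels)


def pick_filter(recordings: dict[str, str]) -> tuple[str, dict[str, float]]:
    """Score each filter's recording and return the lowest-scoring filter."""
    scores = {name: score_recording(text) for name, text in recordings.items()}
    best = min(scores, key=scores.get)
    return best, scores
```

In practice the text for each filter could come from reading its .csv file, for example with `pathlib.Path(...).read_text()`, and the placeholder score could be swapped for whatever measure fits the EEG data best.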

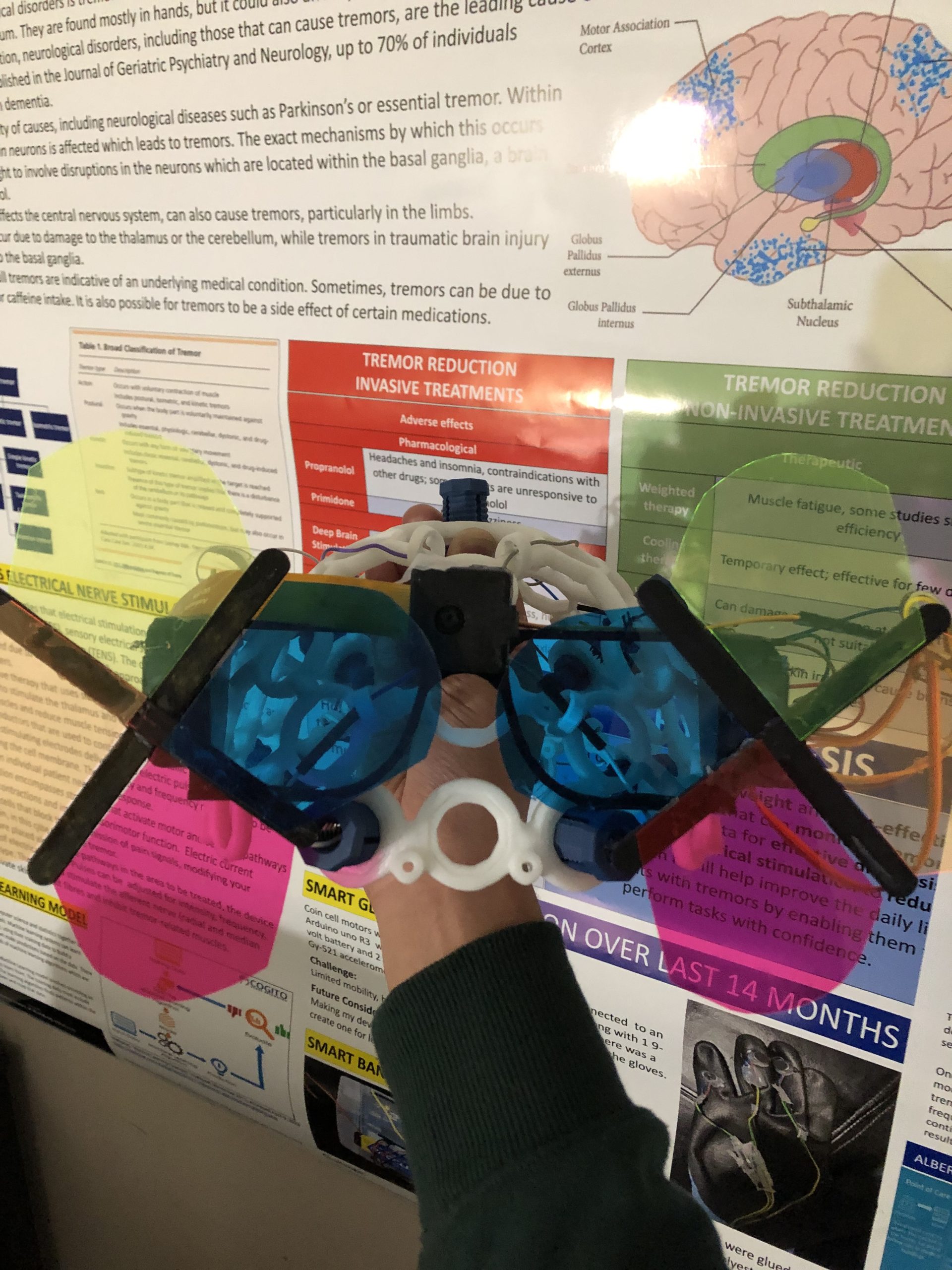

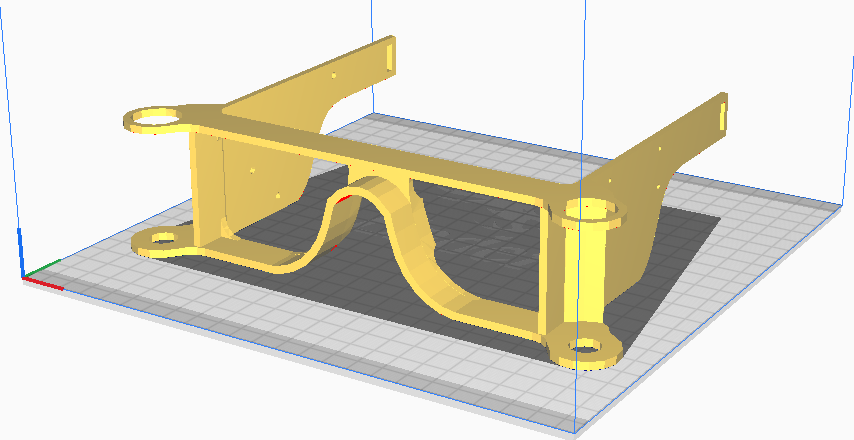

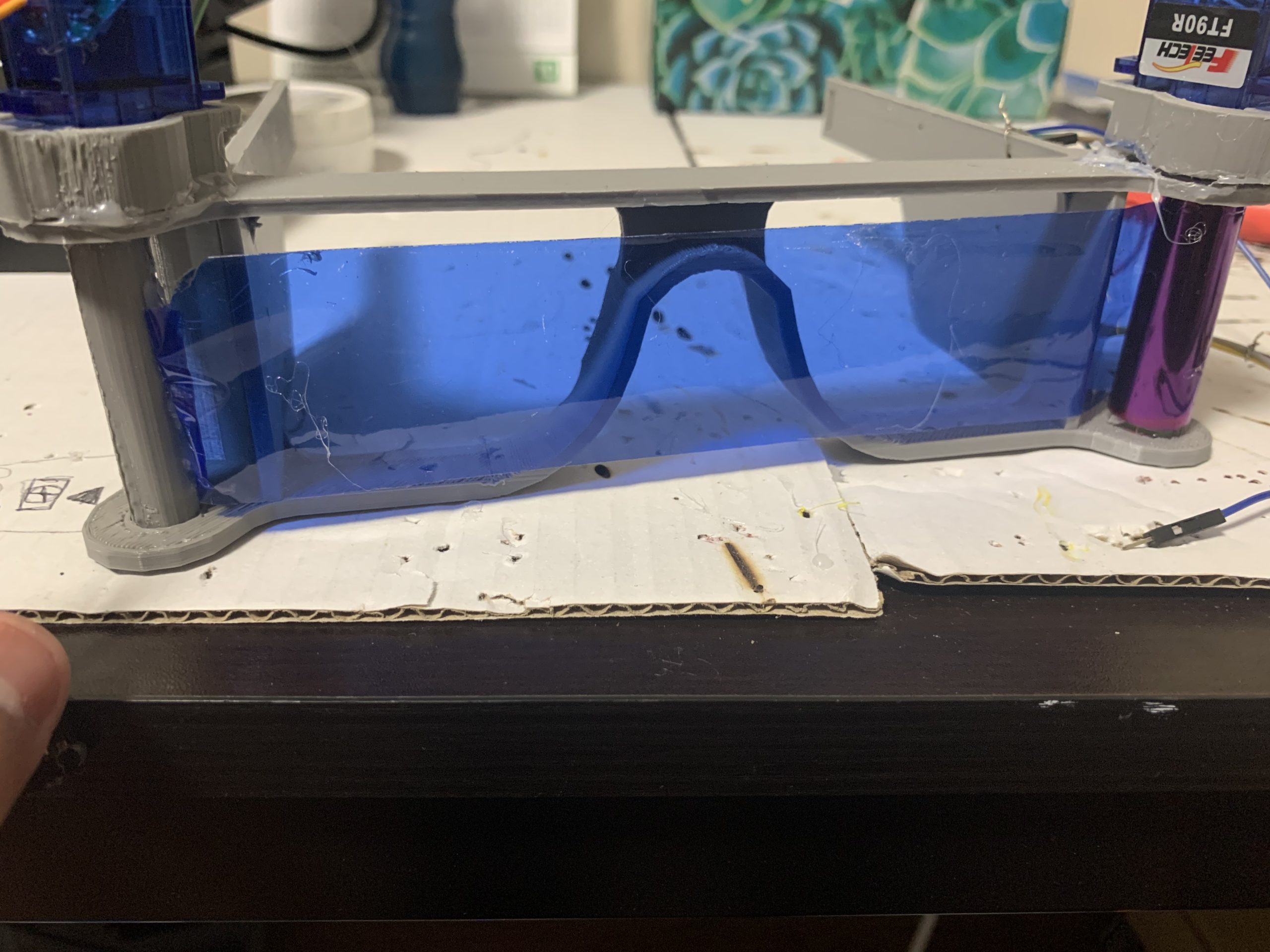

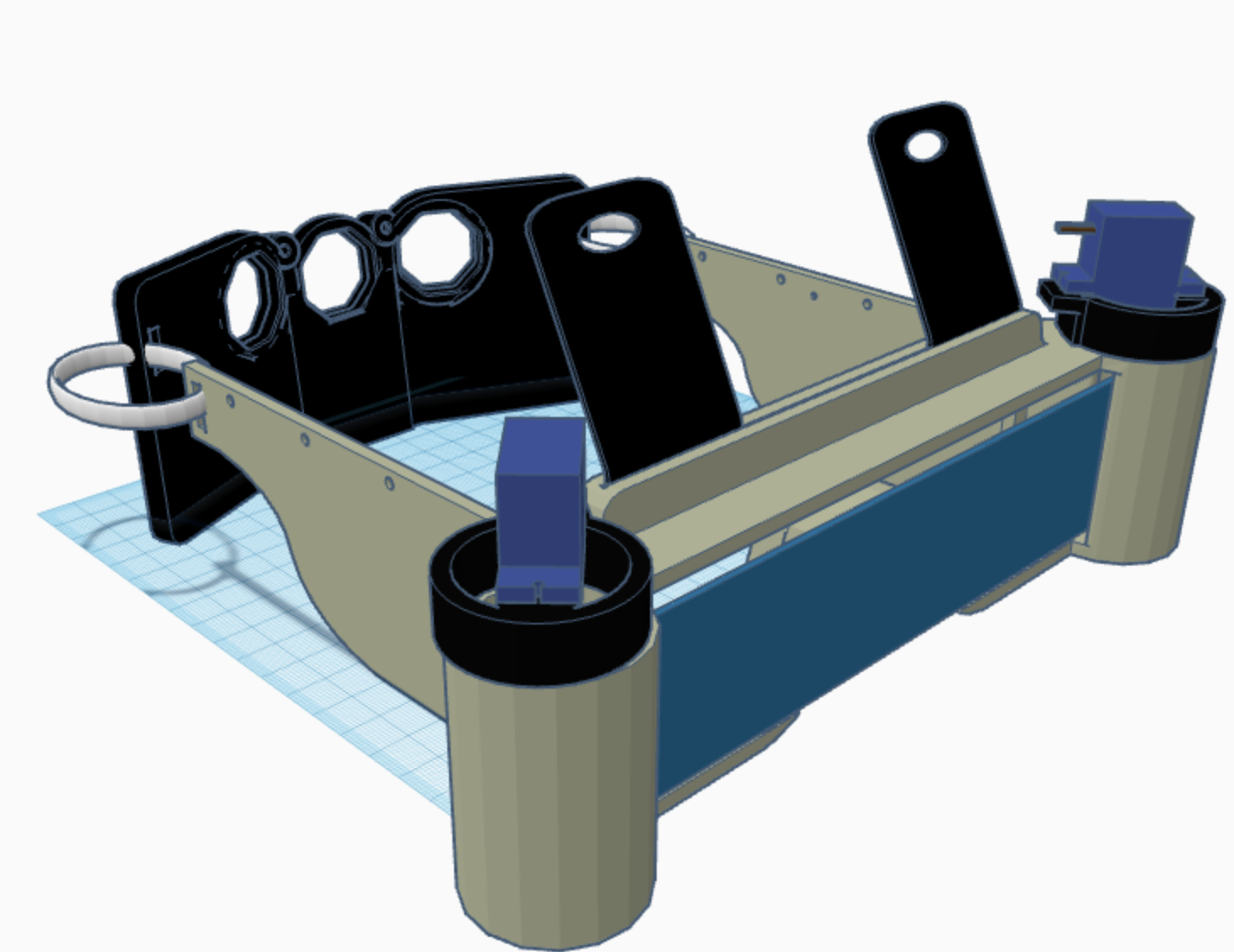

Hardware: The original design, a pair of glasses, could not hold the weight of the components. A new design was engineered with more surface area on the sides and a wide base, shaping the prototype as a pair of goggles.

The device assembled, before the components were wired together.

A strap was added to the back to support the goggles while the user had them on, ensuring a secure fit and keeping them from falling forward. The two continuous servos were placed at the ends, with popsicle sticks glued onto the mounts. This allowed a variety of filters to be used in testing and helped with portability.

The finished prototype looks like the following:

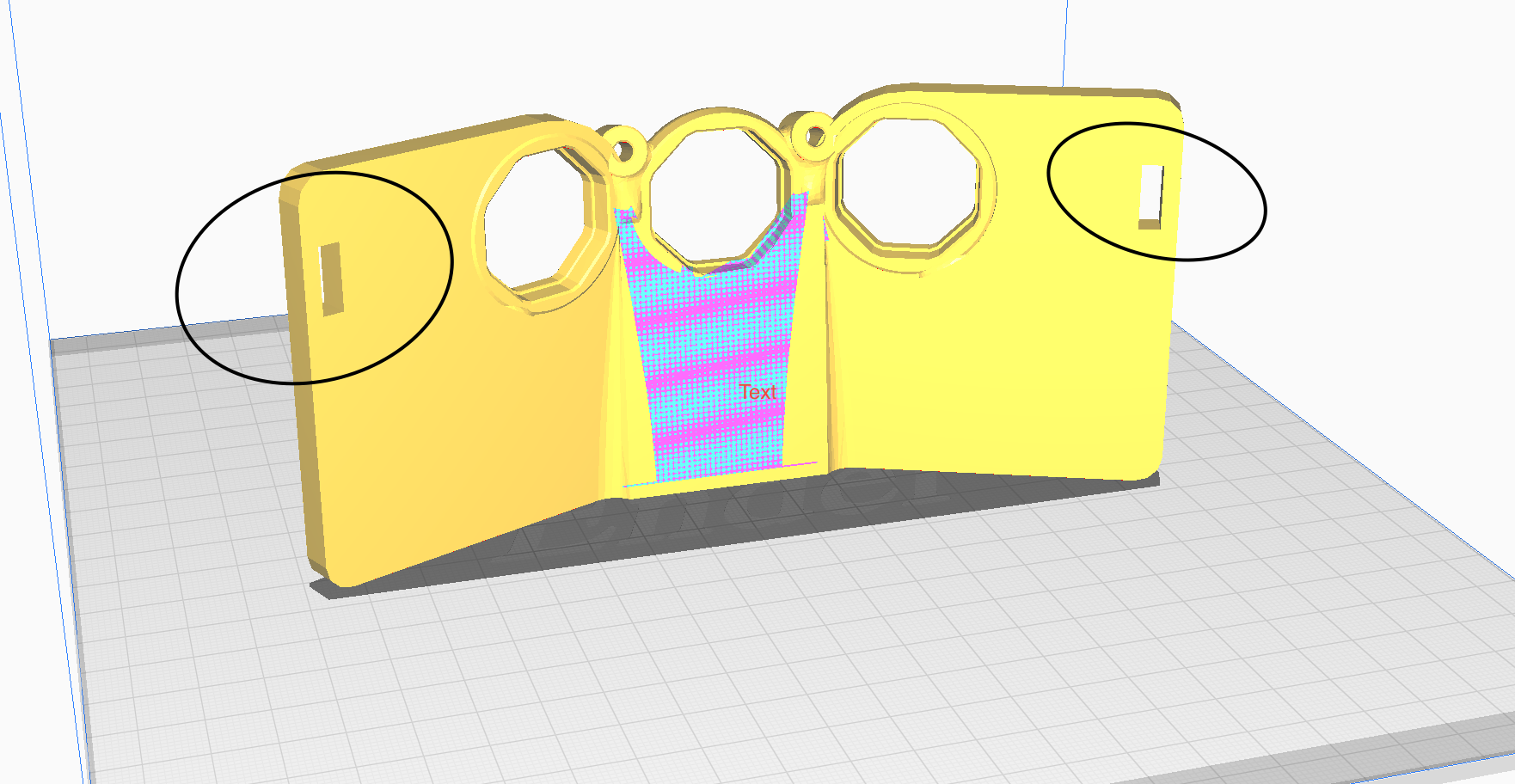

Moving forward from here, the prototype currently being worked on is a single piece. The STL model for the Ultracortex headset is being edited to have only two electrode spots at the back of the device, over the occipital lobe, and two wet electrodes for the frontal lobe.

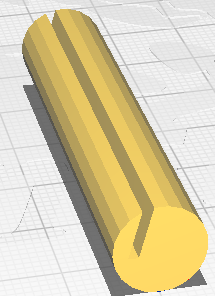

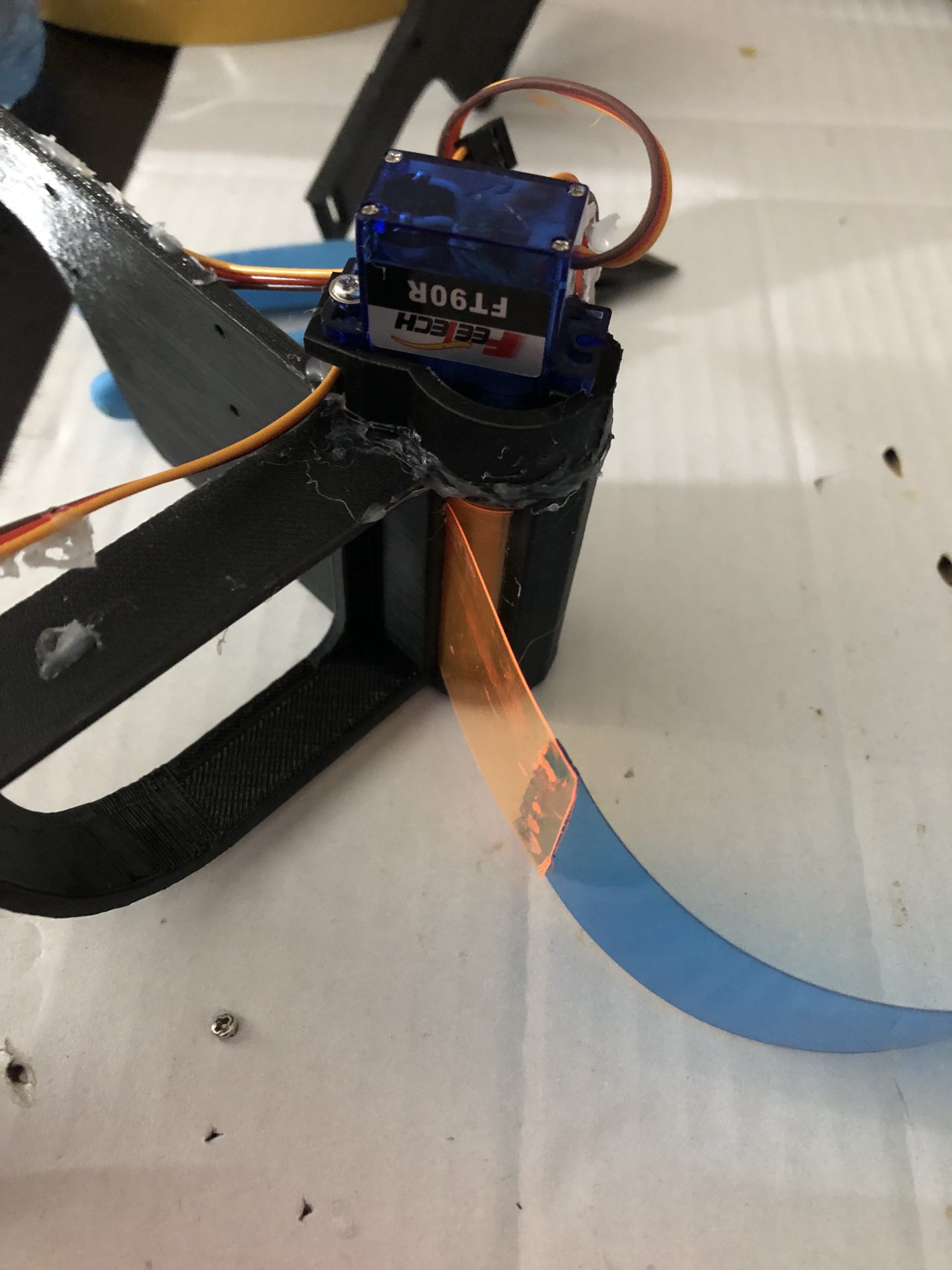

This iteration includes two axles, which are placed between the two spool-holders on each end. The servo motor is placed on top with a custom-made bracket:

**Servo Mount**

**Axle**

The filters are all glued to each other, with each end of the reel glued to the inside of an axle. The servo mount is placed on top at the ends and screwed into the axle. This way, the servo motors spin to swap filters, and because the filters form one long strip, they wrap around the axle as it spins.

The motors are then spun clockwise or counter-clockwise in order to cycle through all the filters.
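To make the cycling idea concrete, here is a minimal sketch of how a move from one filter to another could be planned for a continuous servo. Everything in it is an assumption for illustration: the function name `plan_move`, the count of four filters, the idea that clockwise advances to a higher filter index, and the fixed `seconds_per_filter` timing. A continuous servo has no position feedback, so a timed spin is only an approximation, and the amount of strip moved per second changes as the strip builds up on the axle.

```python
def plan_move(
    current: int,
    target: int,
    num_filters: int = 4,
    seconds_per_filter: float = 0.5,
) -> tuple[str, float]:
    """Return (direction, duration in seconds) to go from one filter to another.

    Filters are indexed 0..num_filters-1 along the strip. The timing value
    is a made-up placeholder that would need to be measured on real hardware.
    """
    for index in (current, target):
        if not 0 <= index < num_filters:
            raise ValueError(f"filter index {index} is out of range")

    steps = target - current
    if steps == 0:
        return ("stop", 0.0)

    # Assumed convention: clockwise winds the strip toward higher indices.
    direction = "clockwise" if steps > 0 else "counter-clockwise"
    return (direction, abs(steps) * seconds_per_filter)
```

The returned direction and duration would then be passed to whatever code actually drives the servos, which is outside the scope of this sketch.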

**Updated progress**

The previous mechanism had several issues, mainly with the spooling system for the filters. The PVC material was not very flexible, which put too much stress on the servo motors and caused them to fall out of place.

The solution was to use a thinner material. I chose cellophane instead, which worked very well with my spooling mechanism. This plastic can be found in thin party bags or packaging wrappers.

A custom backplate was also made to reduce the overall size and weight of the device. The electrodes on this backplate sat over the O1 and O2 regions (according to the 10-20 system), and the backplate included a mount for the Cyton board. It was attached to the goggles through a slit for an elastic, which added a bit of flexibility for those with bigger or smaller head sizes.

Two electrode mounts were also added so the device could cover the frontal lobe regions. These were printed with PLA Flex, which added flexibility, and included a slit that the mounts could slide through if adjustments were needed.

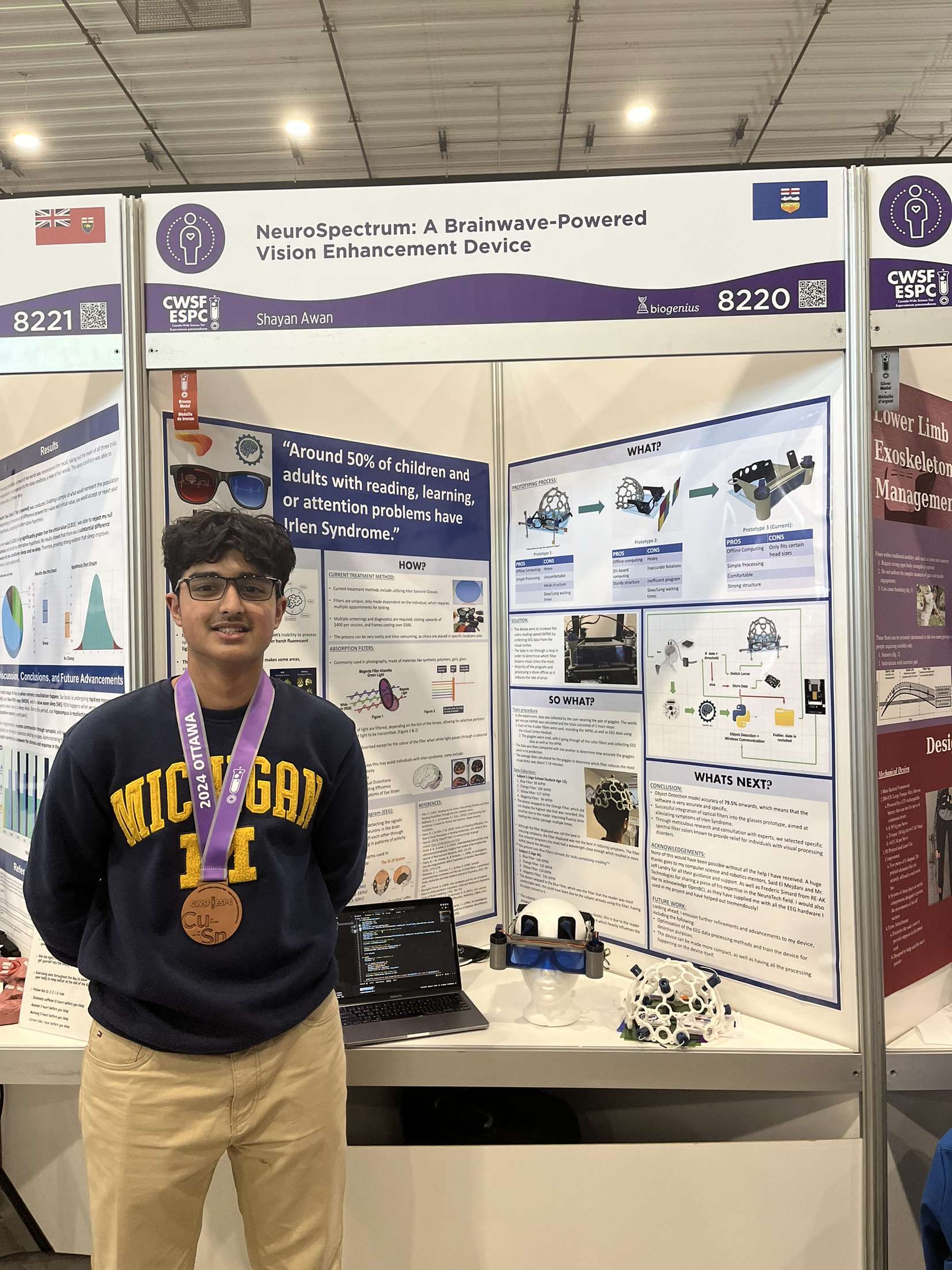

This prototype was presented at the Canada Wide Science Fair, which took place over the course of a week.

At the Canada Wide Science Fair I was presented with a Bronze Medal! The fair was a great experience, and I enjoyed viewing all my fellow BCI innovators' projects!

A few weeks before the fair, I had also received results from the Ingenious+ challenge.

I had the honour of being hosted by Her Honour, the Honourable Salma Lakhani, the Lieutenant Governor of Alberta, and the Rideau Hall Foundation / Fondation Rideau Hall. I was recognized, along with 10 other Albertans, as a recipient of the Ingenious+ Youth Innovation Challenge award!

With the project's massive success, I owe great appreciation to the team at OpenBCI. None of this would have been possible without their support.

For any questions or to get involved, feel free to contact me!

Project Members:

Shayan Awan

Email: shayan.awan.shakeel@gmail.com

LinkedIn: Shayan Awan

X (Formerly Twitter): https://x.com/ShayanAwan0

Feel free to connect/contact me on these platforms.